Research Topics

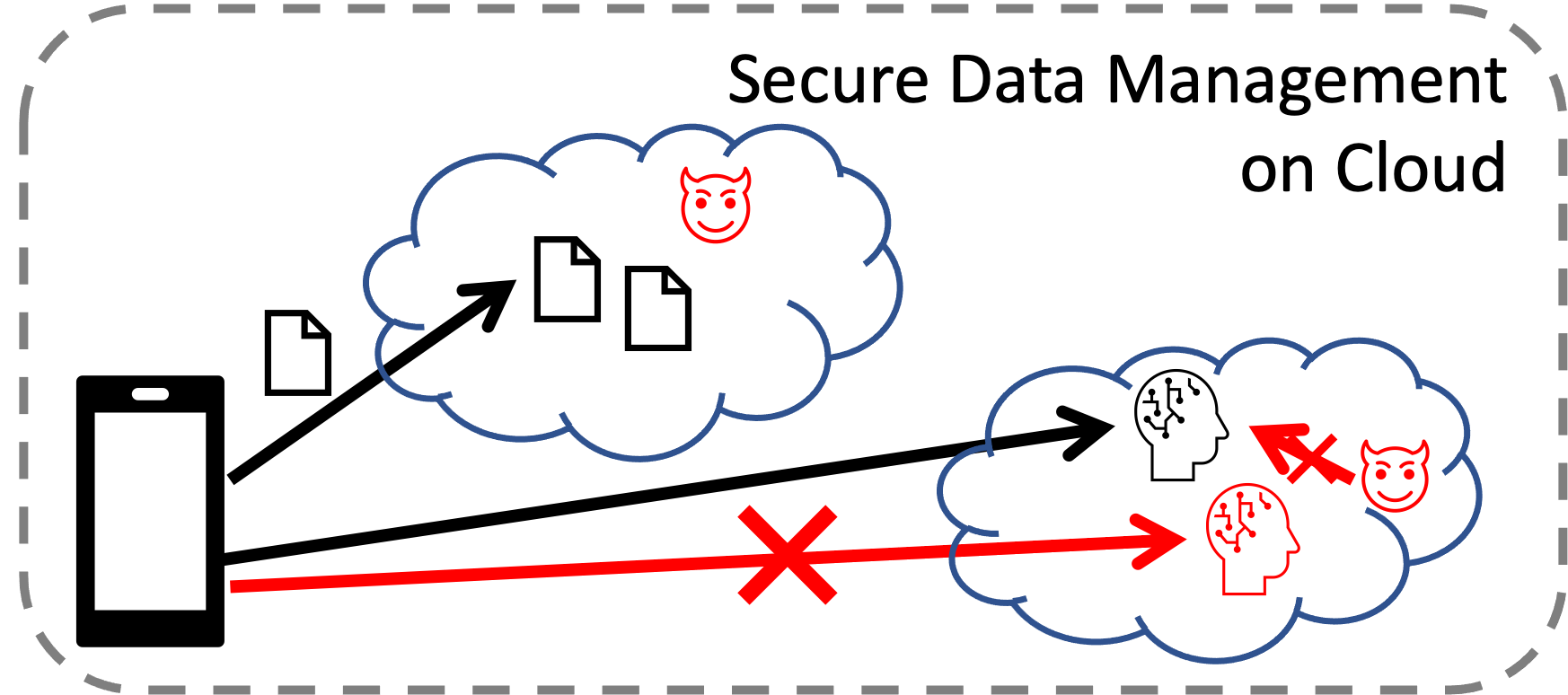

Cloud Security

It is now common, and practically unavoidable, for users to store and process personal data on cloud infrastructure. Our photos, emails, and documents are stored on cloud-based services, and many intelligent services such as voice command systems (e.g. Alexa, Siri, or Clova) process user inputs in the cloud. This trend raises concerns about data security in the cloud: Who can read or modify our data? We aim to design systems and techniques that enable enterprises to securely store and process data on cloud infrastructure to which they do not have physical access.

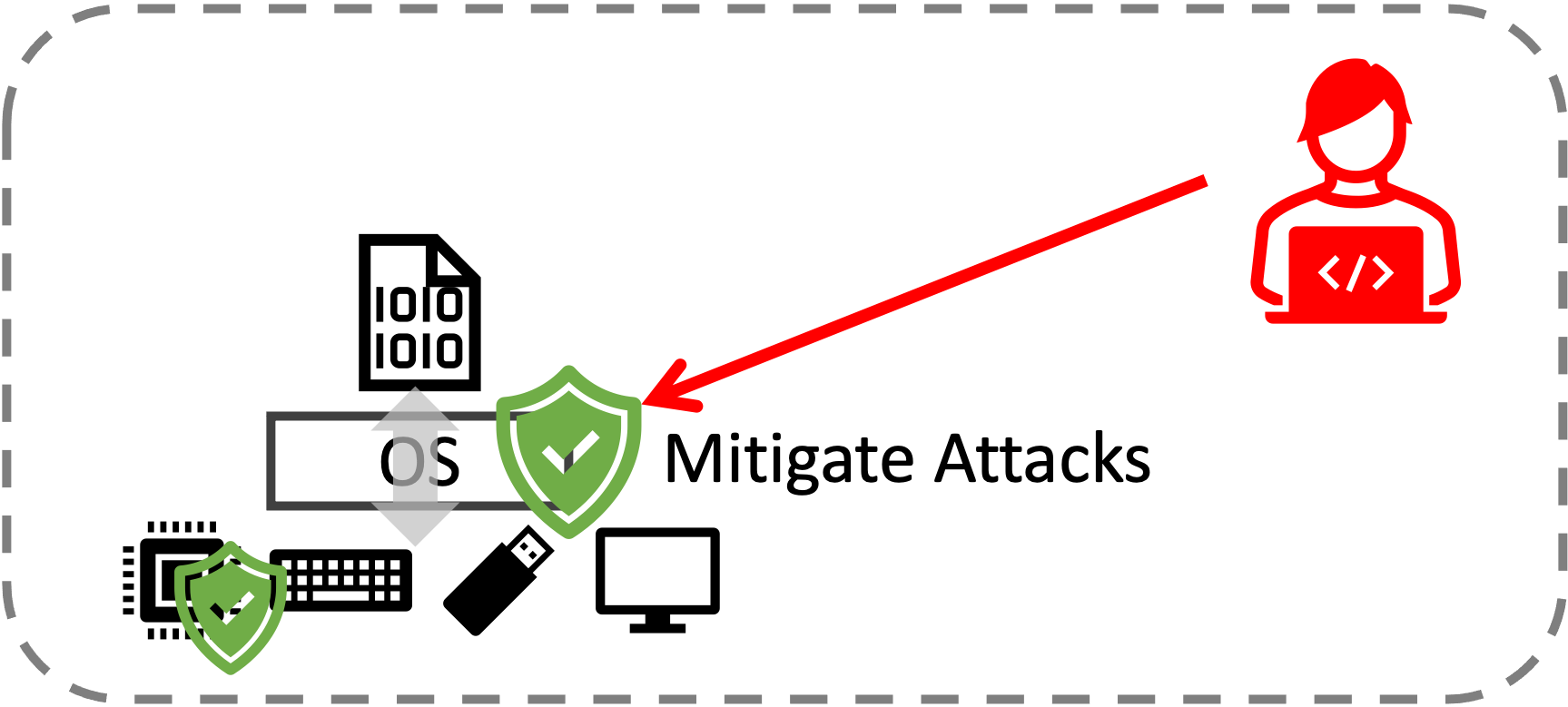

Embedded and IoT Security

Computers are everywhere. From door locks to thermostats, air conditioners to smartwatches, we interact closely with small computing devices in our daily lives. However, the security of these small computers is at risk for several reasons, including the diversity of devices, the difficulty of security patching, and strict limits on computing power. We devise mechanisms to address these risks by mitigating potential attacks on these devices and ensuring the security of the data they handle.

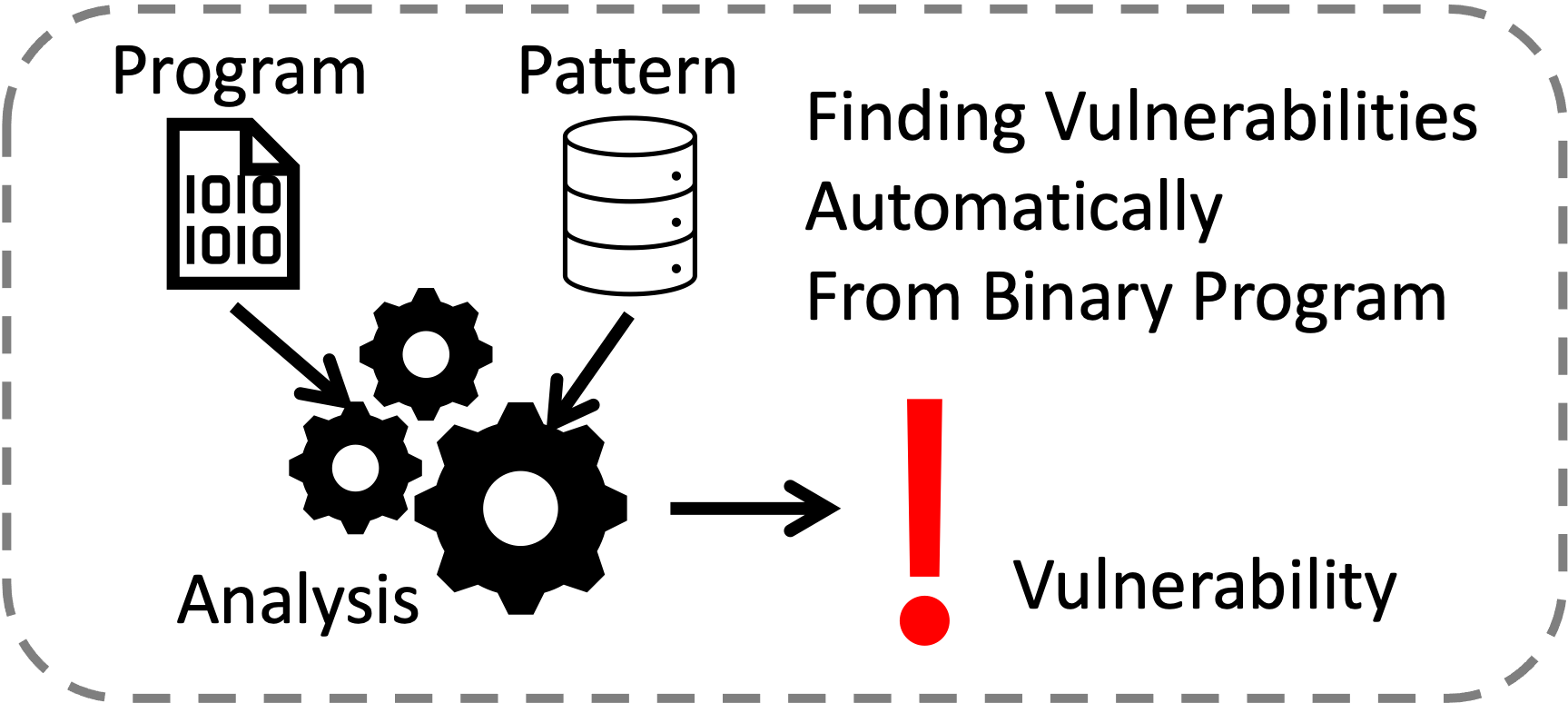

Program Analysis for Vulnerability Hunting

The programs running on computers around us are often vulnerable. Because software is complex, developers inevitably make mistakes when they write it. Some of these mistakes introduce vulnerabilities that adversaries can exploit to make the software misbehave. To address this problem, academia and industry have found vulnerability hunting through program analysis to be an effective solution. By finding vulnerabilities sooner than an adversary, we aim to fix them before they can be exploited.

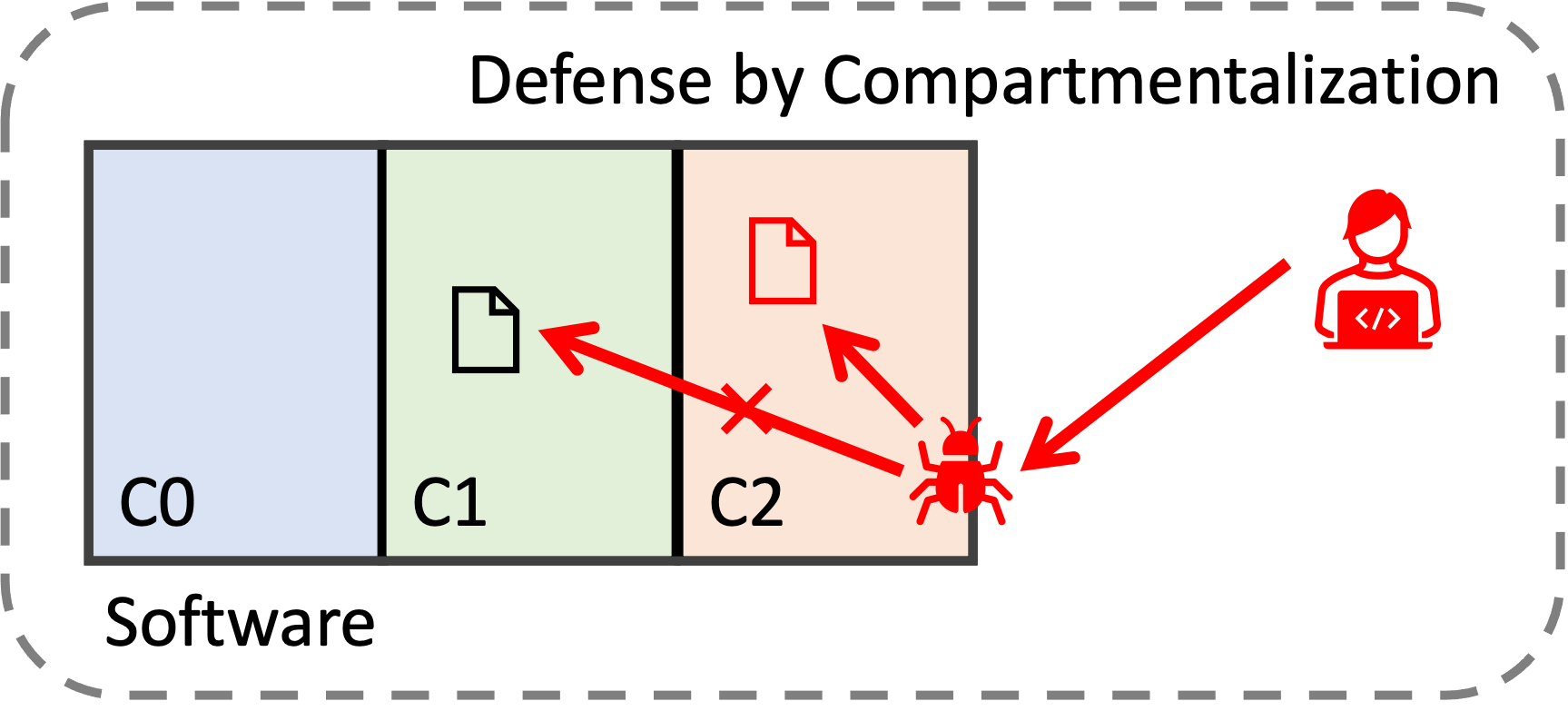

Software Compartmentalization

We continually experience security incidents, despite academic and industry efforts to eradicate software vulnerabilities. This is partially due to the high performance cost of strong generic defenses. This cost motivates us to move toward defenses tailored for specific domains, with relatively lightweight mechanisms that effectively mitigate attacks. Software compartmentalization is an approach that reduces the impact of a single vulnerability by splitting software into isolated components. Even if a vulnerability exists in one component, the walls between components prevent an attacker from compromising the security of the entire program.

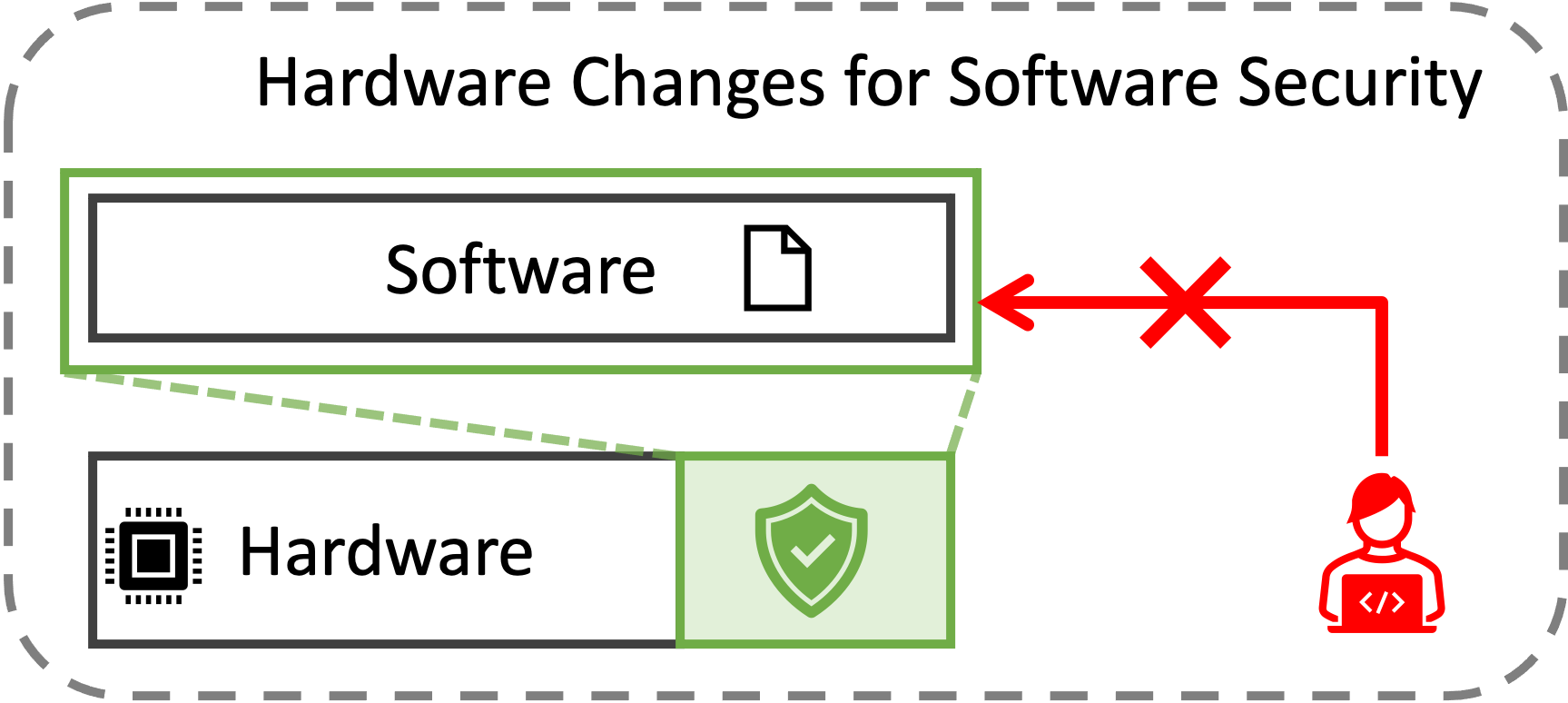

Architecture for Software Security

Architectural security features are indispensable for protecting software. Software relies on these features to prevent many undesirable situations, such as executing user software within an operating system kernel, modifying program code, or reading another program's data. Increasing concern about software security has motivated processor designers to add even more sophisticated features that provide stronger security guarantees. Building on this trend, we aim to design new architectural features and to use recently proposed features more effectively and efficiently for software security.

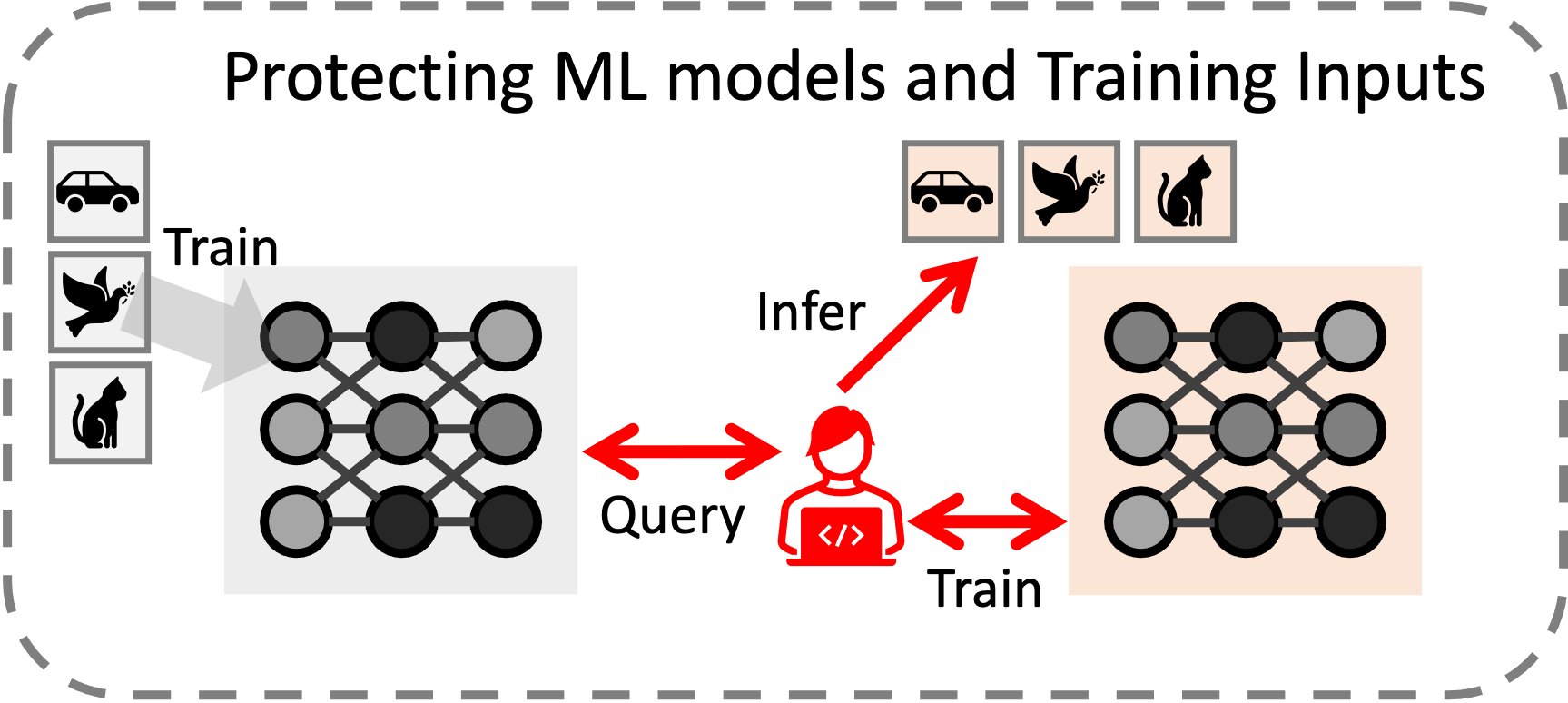

Security for Machine Learning

The wide adoption of machine learning has raised concerns about the security of trained models and training inputs. Existing studies report that an adversary can learn about the inputs used to train a model, or even steal the model's parameters, simply by querying the model. Such attacks must be prevented, considering the high monetary cost of training machine learning models and the privacy breach that could result from compromised training inputs. We aim to better understand these attacks and to devise appropriate defense mechanisms.